Our computational optics and AI-driven software stack are designed to merge the visible with the invisible in real time across multiple surfaces and devices. This enables people to act, react and perform with speed, awareness and impact. And our technology adapts and scales across different platforms and product ranges for maximum flexibility and cost-efficiency.

Core technology

Deep technology at industrial scale

Our approach to deep technology enables us to develop a highly advanced blend of hardware, software and computational optics at industrial scale for the most demanding use cases and users. This new paradigm with reality-centric design at its core is about aligning the capabilities of people and technology. We’re removing the limitations of current HUDs — like a narrow field of view, fixed depth planes and low visual quality — and introducing customizable and scalable solutions that enable the most extraordinary visual AI experiences ever imagined.

And we’re applying this to helmets and sight devices to visualize a previously unseen world, making it possible to operate comfortably in total darkness and navigate your surroundings with complete trust in the autonomous technology around you.

Through our unique blend of hardware and software, we’re unlocking superhuman sensing capabilities across the automotive, defence and aerospace industries.

Infinite pixel depth

Our technology creates an independent lightfield for each eye, allowing us to control the perceived distance of the content on a per-pixel level. This makes it possible to match virtual elements 1-to-1 with reality for a completely natural XR experience. For the first time ever, ultra high-resolution 3D imagery appears up close or further away with incredible levels of detail and accuracy.
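To make "perceived distance" concrete, here is a minimal sketch of the standard geometry behind it: the angle at which the two eyes converge on a point, and the focus demand at that distance. It is general vision science, not a description of Distance's implementation. The function names and the 63 mm default interpupillary distance are assumptions chosen for illustration.

```python
import math


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two eyes' lines of sight when fixating a point
    straight ahead at distance_m. ipd_m is an assumed interpupillary distance."""
    if distance_m <= 0:
        raise ValueError("distance_m must be positive")
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


def focus_demand_diopters(distance_m: float) -> float:
    """Accommodation (focus) demand in diopters: the reciprocal of distance in metres."""
    if distance_m <= 0:
        raise ValueError("distance_m must be positive")
    return 1.0 / distance_m


if __name__ == "__main__":
    # Compare a near object with a far one.
    for d in (0.5, 2.0, 20.0):
        print(d, vergence_angle_deg(d), focus_demand_diopters(d))
```

Content placed at a fixed depth plane gives every pixel the same vergence and focus cues. Controlling distance per pixel means these cues can vary across the image, as they do in the real scene.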

Life-size FOV

Precise 3D visualizations appear on top of reality across the entire field of view, perfectly matching the observable world people see and experience around them. Other solutions confine virtual elements to a small viewing area, but with Distance, visual AI is brought to life across the full windshield, visor or other display, without compromises or limitations.

Dynamic eye-box

Distance allows virtual content to be shown dynamically based on natural viewing angles and head movement. And with integrated eye-tracking, we make sure every image stays firmly in place and doesn’t randomly disappear from view — a common issue with traditional HUDs. Our technology offers a superior visual experience that always feels completely natural and intuitive.

3D super resolution

When it comes to visual quality and viewer comfort, we don’t make compromises. Our patented 3D super resolution creates unmatched levels of detail in both 3D and 2D images, and also in vectorized graphics — blending naturally into the real-world setting whenever it’s needed without creating any visual burden or discomfort.

Low volume, high impact

Our software-driven approach requires significantly less hardware volume than other solutions, offering a smart way to scale that’s flexible and cost-efficient. For example, carmakers can easily implement mixed reality across their full portfolio with minimal design adjustments. Our technology scales across different industries and product ranges, maximizing the use of off-the-shelf display technology and then supercharging it with software and computational optics.

Distance Compositor

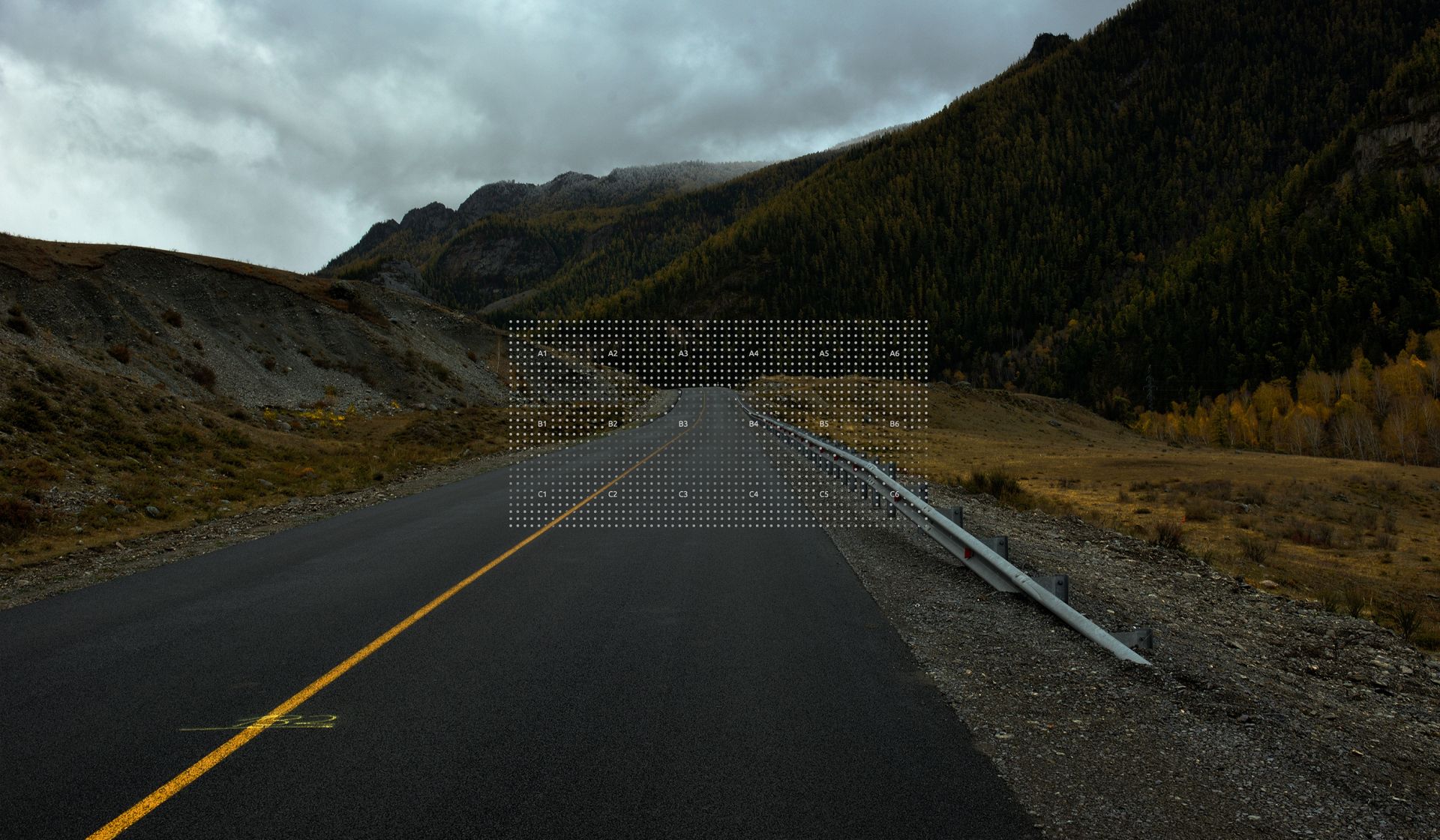

The Distance Compositor is the key piece of software that bridges the virtual and real worlds, keeping the two in perfect sync. This invisible component makes sure the synthetic content aligns perfectly in relation to the real-world environment — displaying real-time virtual imagery in the most natural way possible. All with ultra-low latency for an unrivaled mixed reality experience.

The new front-end for sensor fusion

Distance's display technology structures perception. With infinite pixel depth, lightfield displays are no longer confined to representing color and brightness alone. Each pixel encodes meaningful, continuous-depth data, turning the display into a spatial interface rather than a flat screen.

This unlocks a new class of vision systems where the display becomes the front-end of sensor fusion. Instead of rendering pre-processed scenes, our 3D lightfield technology can directly visualize fused inputs from lidar, radar, and cameras in real time — all in a depth-native format. Human operators, autonomous systems, and AI agents can now visualize and interpret spatial relationships, object contours, and occlusions as intuitively as brightness or color.

By embedding depth at the pixel level, Distance enables machines — and their human partners — to operate not just with more data, but with perceptual context.
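As a rough illustration of what a depth-native, per-pixel format can look like, the sketch below projects 3D points (for example from lidar) into an image-sized depth buffer using a generic pinhole camera model, keeping the nearest point per pixel. This is a textbook technique shown under stated assumptions, not Distance's pipeline. The function name and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical.

```python
import math


def depth_buffer(points, width, height, fx, fy, cx, cy):
    """Project (x, y, z) points in the camera frame (z forward, metres)
    into a height x width grid of depths. Empty pixels stay at infinity.

    fx, fy, cx, cy are assumed pinhole intrinsics (focal lengths and
    principal point, in pixels).
    """
    buf = [[math.inf] * width for _ in range(height)]
    for x, y, z in points:
        if z <= 0:
            continue  # point is behind the camera
        u = int(round(fx * x / z + cx))  # pixel column
        v = int(round(fy * y / z + cy))  # pixel row
        if 0 <= u < width and 0 <= v < height and z < buf[v][u]:
            buf[v][u] = z  # nearest surface wins, which handles occlusion
    return buf


if __name__ == "__main__":
    pts = [(0.0, 0.0, 5.0), (0.0, 0.0, 2.0), (1.0, 0.0, -1.0)]
    grid = depth_buffer(pts, width=4, height=4, fx=1.0, fy=1.0, cx=2.0, cy=2.0)
    print(grid[2][2])
```

Keeping only the nearest depth per pixel is what lets occlusions be resolved once several sensors contribute points to the same view.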

Our technology improves situational awareness, decision making and safety in fast-moving tactical environments. Adding value, not burden.

Elevated awareness. Enhanced safety and comfort. Our Lightfield HUD technology will transform how drivers interact with their surroundings — at any distance.